THE HUMAN HEARING SYSTEM PART 2: THE SOFTWARE

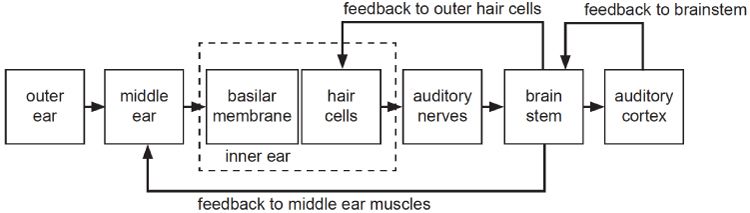

In the previous micro tutorial, we briefly described the human hearing system’s ‘hardware’: the outer ear (ear shell & ear canal), middle ear (ear drum & inner bone structure), inner ear (cochlea) and the nerve connection to the brain.

The adult human brain comprises approximately one hundred billion (10¹¹) brain cells, called neurons, capable of transmitting and receiving bio-electrical (‘digital’) signals between each other. Each neuron has connections with thousands of nearby neurons through short connections (dendrites). Some neurons connect to sensory and motor organs in the body through additional long connections (axons). With an estimated total of five hundred trillion (5×10¹⁴) connections, the human brain has massive bio-electrical processing power to manage the body’s processes, memory and ‘thinking’. And, of course, hearing.
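As a rough consistency check, multiplying the neuron count by the number of connections per neuron reproduces the estimate of 5×10¹⁴ (five hundred trillion) connections. The value of 5,000 connections per neuron is an assumption standing in for ‘thousands’; the sketch only checks the order of magnitude.

```python
# Order-of-magnitude check of the brain's connection count.
NEURONS = 1e11                  # about one hundred billion neurons (from the text)
CONNECTIONS_PER_NEURON = 5_000  # assumption: "thousands" taken as 5,000

total_connections = NEURONS * CONNECTIONS_PER_NEURON
print(f"{total_connections:.0e} connections")  # prints "5e+14 connections"
print(f"{total_connections:,.0f}")             # prints "500,000,000,000,000"
```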

In the ‘multiconnector’ of our brain, the brain stem, some auditory processing is already performed. The brain stem analyses the coded signals from the cochlea to provide feedback to the ear. One such ‘feedback loop’ goes to the middle ear muscles, which tune the bone structure to optimise the middle ear’s transfer function. This feedback loop also helps to protect the ear in loud environments: when loud sounds occur, the muscles contract and stiffen the bone structure, damping the transfer function. The second feedback loop sends neural signals back to the cochlea to interact with the hair cells and improve their ‘tuning’.

The brain stem’s pre-processing also includes analysis of the two pulse streams, one from each ear. The spectrum over time is analysed for both streams and then compared. If there are spectral similarities, the level difference and time difference between the streams are analysed. If a sound comes from straight ahead, both ears will receive exactly the same signal (depending on the hairstyle, of course!). But if a sound comes from the far left (or right), the right (or left) ear will be shadowed by the head, which attenuates high frequencies and lowers the overall level. Also, since the average human head is about 21 centimetres wide and sound waves travel at a limited speed of around 340 m/s, there will be a time difference of around 600 microseconds (0.21 m ÷ 340 m/s ≈ 0.6 ms). These two parameters, level difference and time difference, are used to estimate the original position of the sound, providing a resolution of a few degrees in optimal circumstances.
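The time-difference arithmetic can be sketched with a simple straight-line model, assuming a distant source and a path difference of head width × sin(azimuth). The function names are hypothetical, and the model ignores the extra path around the curved head, so it is an approximation rather than how the brain stem actually computes position. Inverting it shows how a measured time difference could map back to an angle.

```python
import math

HEAD_WIDTH_M = 0.21      # ear-to-ear distance used in the text
SPEED_OF_SOUND = 340.0   # m/s, as in the text

def itd_seconds(azimuth_deg, head_width=HEAD_WIDTH_M, c=SPEED_OF_SOUND):
    """Interaural time difference for a distant source (straight-line model).

    azimuth_deg: 0 = straight ahead, +90 = far right, -90 = far left.
    A positive result means the sound reaches the right ear first.
    """
    return head_width * math.sin(math.radians(azimuth_deg)) / c

def azimuth_from_itd(itd, head_width=HEAD_WIDTH_M, c=SPEED_OF_SOUND):
    """Invert the model: estimate the azimuth (degrees) from a measured ITD."""
    ratio = itd * c / head_width
    ratio = max(-1.0, min(1.0, ratio))  # clamp values pushed out of range by noise
    return math.degrees(math.asin(ratio))

for az in (0, 10, 45, 90):
    print(f"{az:3d} deg -> {itd_seconds(az) * 1e6:6.1f} us")
```

For a source at 90 degrees this gives roughly 618 microseconds, in line with the ‘around 600 microseconds’ above. Because `asin` only returns angles between -90 and +90 degrees, this time cue alone cannot tell a source in front from one behind.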

After pre-processing, the brain stem sends information to the auditory cortex, situated in the so-called ‘Brodmann areas’ 41 and 42 of the human brain. Rather than interpreting, storing and recalling each of the millions of nerve impulses per second (which might well exceed the brain’s processing and memory capacity), the auditory cortex converts the incoming information into hearing sensations that can be enjoyed and stored as information units at a higher level of abstraction than the original incoming information. These are known as aural activity images and aural scenes. It’s these images and scenes that we can understand, remember and enjoy.

First, the auditory cortex constructs an aural activity image, including the main perception parameters of audio events such as loudness, pitch, timbre and localisation. These detailed descriptions are stored in the brain’s short-term memory, which is thought to be able to hold image details for about 20 seconds.
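Purely as a software analogy for this ‘hardware and software’ view, an aural activity image can be pictured as a record of the perception parameters listed above, and short-term memory as a buffer that forgets records older than about 20 seconds. The class names, fields and units below are hypothetical illustrations, not a model of how neurons store information; the sketch also assumes images arrive in time order.

```python
from collections import deque
from dataclasses import dataclass

RETENTION_S = 20.0  # approximate short-term retention mentioned in the text

@dataclass
class AuralActivityImage:
    """Hypothetical record of the perception parameters named in the text."""
    time_s: float       # moment the audio event was heard
    loudness: float     # arbitrary units (assumption)
    pitch_hz: float
    timbre: str         # placeholder label, e.g. "bright"
    azimuth_deg: float  # localisation

class ShortTermAuralMemory:
    """Keeps only images newer than the retention time (a software analogy)."""

    def __init__(self, retention_s=RETENTION_S):
        self.retention_s = retention_s
        self._images = deque()

    def add(self, image):
        self._images.append(image)
        self._forget(image.time_s)

    def recall(self, now_s):
        self._forget(now_s)
        return list(self._images)

    def _forget(self, now_s):
        # Images arrive in time order, so the oldest one is always on the left.
        while self._images and now_s - self._images[0].time_s > self.retention_s:
            self._images.popleft()

mem = ShortTermAuralMemory()
mem.add(AuralActivityImage(0.0, 4.0, 440.0, "bright", -30.0))
mem.add(AuralActivityImage(15.0, 2.0, 220.0, "dull", 10.0))
recalled = mem.recall(now_s=25.0)  # the image from t = 0 s has been forgotten
```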

By comparing the aural activity images with previously stored images, other sensory images (e.g. vision, smell, taste, touch, balance) and the overall context, the brain creates a further aggregated and compressed aural scene to represent the meaning of the hearing sensation. The aural scene is constructed using efficiency mechanisms, which select only the relevant information in the aural activity image, and correction mechanisms, which fill in gaps and repair distortions in the aural activity images. The aural scenes can be remembered for many years, depending on how important they are for the individual. Very important scenes are finally stored in long-term memory to become part of the ‘soundtrack of our lives’.
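The two mechanisms can be loosely illustrated in code, again only as an analogy. Here an aural activity image is a plain dictionary, ‘efficiency’ keeps only the parameters assumed to be relevant, and ‘correction’ fills a missing value from a previously stored scene. The function `build_aural_scene` and the `RELEVANT` selection are hypothetical; the real selection depends on the listener and the context.

```python
# Hypothetical "aural scene" builder: a loose software analogy, not a brain model.
RELEVANT = ("pitch_hz", "azimuth_deg")  # assumption: what matters to this listener

def build_aural_scene(activity_image, previous_scene, relevant=RELEVANT):
    """Compress an activity image into a scene, filling gaps from memory."""
    scene = {}
    for key in relevant:  # efficiency: keep only the relevant parameters
        value = activity_image.get(key)
        if value is None:  # correction: fill the gap from a stored scene
            value = previous_scene.get(key)
        scene[key] = value
    return scene

image = {"loudness": 3.0, "pitch_hz": None, "azimuth_deg": 20.0, "timbre": "nasal"}
previous = {"pitch_hz": 440.0, "azimuth_deg": 0.0}
scene = build_aural_scene(image, previous)
```

Here `scene` keeps the new direction (20 degrees), drops loudness and timbre, and takes the missing pitch from the earlier scene (440 Hz).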

Aural scene images are triggered by audio events: hearing sound. But their meaning, and the way they are stored, is strongly influenced by other sensory images. For example, the ‘McGurk-MacDonald’ experiment describes how the syllable ‘ba’ can be heard as ‘da’, or even as ‘ga’, when the sound is dubbed onto a film clip of a person’s mouth pronouncing ‘ga’. Apparently, the visual information in this case overrides the aural information.

Professional listeners can train both their aural activity imaging and their aural scene processes to focus on audio properties, remember more details and store them for a longer time.